Looking Backward

I realize it was only two months ago I took you on some time travel, way back into the history of technology.

Here I go again. I was researching another project and, in the course of it, found some dates that, to a degree, dated me but also astounded me. So I thought I'd share them with you today.

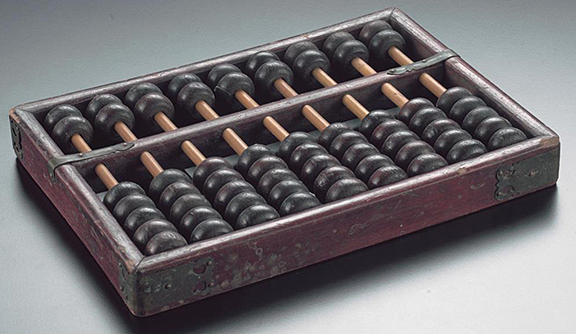

So, let's start in 1880, shall we? Technically (all puns intended), we could go back to the abacus, since in its own way it was a "computer." By sliding beads along wires or rods stretched between two posts, the user could keep track of numbers. To read an abacus, you simply look at which beads have been moved, and where. Each column represents a place value: the far-right column holds the ones, the next the tens, the next the hundreds, and so on. In some places, people cut notches into a stick (a tally stick) to represent values, along more or less the same principles.
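For the curious, here is a minimal sketch of how place value lets you "read" an abacus. It assumes a simplified abacus in which each column shows 0 to 9 moved beads. Real abaci, such as the Japanese soroban with its separate five-bead, work a little differently. The function name `abacus_value` is purely illustrative.

```python
def abacus_value(columns: list[int]) -> int:
    """Read a simplified abacus, columns listed left to right (highest place first).

    Each entry is the number of beads moved in that column, 0 through 9.
    This is a hypothetical model, not how every historical abacus was built.
    """
    value = 0
    for beads in columns:
        if not 0 <= beads <= 9:
            raise ValueError("each column holds 0 to 9 in this simplified model")
        # Shift everything read so far one place to the left, then add this column
        value = value * 10 + beads
    return value


print(abacus_value([3, 0, 7]))  # 3 hundreds, 0 tens, 7 ones -> 307
```

Each step multiplies by ten because moving one column to the left means moving up one place value.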

But what happened in 1880? The U.S. population had grown so large that the census "enumerators" faced a serious number-crunching challenge. It took seven years to tabulate the results, so the brains at the top began looking for new ways to handle huge numbers.

About this time, Herman Hollerith (yes, that Hollerith, if you're old enough to remember) designed a "punch card" system to tabulate the next census, in 1890. The punch cards bore a remote similarity to the abacus in that the holes punched in each card represented numbers, and machines could read and tally the cards far more quickly than a person sitting with paper and pencil. The company Hollerith founded to build these tabulating machines eventually became part of IBM. The 1890 census results were ready in about three years, and the system saved the government some $5 million.

1939: Hewlett-Packard was founded by David Packard and Bill Hewlett in a Palo Alto, California, garage. One of its first big customers was Disney, which bought HP audio oscillators to test sound equipment for the production of Fantasia.

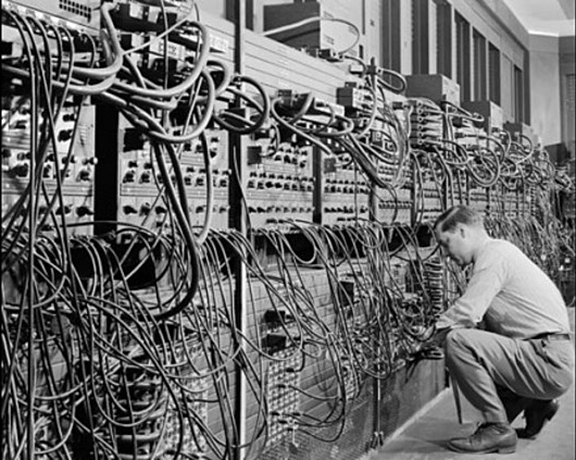

Between 1943 and 1945, John Mauchly and J. Presper Eckert of the University of Pennsylvania built the Electronic Numerical Integrator and Computer (ENIAC). Often considered the first general-purpose electronic digital computer, it filled a 20-foot by 40-foot room and used about 18,000 vacuum tubes. The vacuum tube, children, was something your television set used to require. Invented in 1904, the vacuum tube is a device that controls the flow of electric current through a high vacuum between electrodes across which a voltage has been applied. (Wikipedia)

Then, in 1946, Mauchly and Eckert left the University of Pennsylvania and, with funding from the Census Bureau, built UNIVAC, delivered in 1951 as the first commercial computer in the United States for business and government applications.

In 1947, William Shockley, John Bardeen, and Walter Brattain of Bell Laboratories invented the transistor. They discovered how to make an electric switch from solid materials, with no need for a vacuum. This was a pivotal moment in the history of communications technology: what had required huge amounts of space could now be done with the tiny transistor. Not much later, teenagers were listening to their own transistor radios, away from the big, bulky radio under their parents' control.

In the 1950s, Grace Hopper developed some of the first compilers and the English-like FLOW-MATIC language, which became a foundation of COBOL, introduced in 1959. COBOL was designed for business data processing and built around files of records. A typical program opened a file, read its records, performed some operation on them, wrote the results, and closed the file. So much COBOL code was still running decades later, while fewer and fewer programmers knew the language, that COBOL sat at the center of a challenge as 2000 neared. Many programs stored years as just two digits, so businesses and government agencies feared computers would read "00" or "01" as 1900 or 1901. They scurried to fix the aging code that set dates for any number of events.
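To see why two-digit years were so worrying, here is a small sketch of the arithmetic, next to one common repair known as "windowing." The other common fix was simply expanding dates to four-digit years. The function names and the pivot value of 50 are assumptions chosen for illustration. Real systems picked their own cutoffs.

```python
def naive_year(yy: int) -> int:
    """Old two-digit logic: assume every year belongs to the 1900s."""
    return 1900 + yy


def windowed_year(yy: int, pivot: int = 50) -> int:
    """Windowing fix: two-digit years below the (assumed) pivot are read as 20xx, the rest as 19xx."""
    return 2000 + yy if yy < pivot else 1900 + yy


# A hypothetical loan taken out in '99 and checked in '01
print(naive_year(1) - naive_year(99))        # -98: the computer thinks time ran backward
print(windowed_year(1) - windowed_year(99))  # 2
```

Windowing was cheap because it left the stored data alone. The trade-off is that it only postpones the problem until dates cross the pivot.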

Both COBOL and FORTRAN were still in wide use in the mid-1970s. By then, Xerox had opened its Palo Alto Research Center, or PARC (in 1970), and gathered together a brain trust of computer scientists. Copiers were big business, and with computer centers growing at schools and businesses worldwide, the company saw an opportunity.

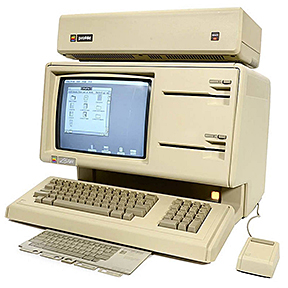

By the late 1970s, Steve Jobs was hard at work at Apple on the projects that became the Lisa and the Macintosh. He heard about PARC's work on the Xerox Alto and its GUI, pronounced "gooey" and short for Graphical User Interface. In 1979, Jobs and a group of Apple engineers toured PARC to see it, and Jobs came away sure he had seen the future of computing. Bill Gates saw that future too. Microsoft was writing software for the Macintosh under contract to Apple, and in 1983, before the Mac reached the market, Gates announced Windows, a graphical interface for Microsoft's operating system. The Macintosh launched in January 1984, and Windows 1.0 followed in 1985. When Jobs accused Gates of theft, Gates replied, "I think it's more like we both had this rich neighbor named Xerox and I broke into his house to steal the TV set and found out that you had already stolen it."

Meanwhile, IBM debuted its first personal computer in 1981, running Microsoft's MS-DOS operating system. Apple's Lisa (a relatively big flop) arrived in 1983, and Windows didn't become truly popular until version 3.0 in 1990. In 1985, Commodore released the superior Amiga 1000, which was handling digital video and audio well before its competitors were even dreaming about it. The Amiga later became home to NewTek's "Video Toaster," a system that launched the digital video editing revolution.

1990: Tim Berners-Lee, a researcher at CERN, the high-energy physics laboratory near Geneva, developed HyperText Markup Language (HTML), along with the first web browser and web server, and that spawned the "Web." Remember referring to the "World Wide Web," and how tedious it was to say "double u double u double u" before every web address? HTML is still the language of web pages today.

1996: Sergey Brin and Larry Page began developing the Google search engine as a research project at Stanford University. It was not alone, as there were many other search engines. But Google, whose aim was to index all of the world's knowledge so that it could be searched, ranked results by relevance in a new way. Its PageRank algorithm treated a link from one web page to another as a vote of confidence, and it gave more weight to votes from pages that were themselves heavily linked. So if far more cooking sites linked to an apple pie recipe page than to the Apple Computer website, a search for "apples for making pie" would put the recipe page near the top of the results. That made it even more likely that the next 100 people who searched on that string would choose it. Many other search engines used different algorithms, some of them quickly offering higher placement for a fee. But users ultimately drove the market, at least for a while, and Google became very popular.
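The ranking idea Brin and Page published, PageRank, scores a page by the links pointing to it. Here is a simplified sketch using "power iteration" on a tiny, made-up web; the page names are hypothetical. The damping factor of 0.85 is a commonly used value. Google's real ranking combined PageRank with many other signals, so treat this as an illustration of the core idea only.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Simplified PageRank by power iteration.

    links maps each page to the pages it links to; every target must also be a key.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal scores
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump" term)
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A link is a vote: split this page's rank among the pages it links to
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly over all pages
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# A tiny, made-up web: three pages link to the pie recipes, one links to Apple
web = {
    "pie_recipes": ["baking_blog"],
    "baking_blog": ["pie_recipes"],
    "grandmas_kitchen": ["pie_recipes"],
    "dessert_forum": ["pie_recipes", "apple_computer"],
    "apple_computer": [],
}
for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
    print(f"{page:18} {score:.3f}")
```

In this toy web, "pie_recipes" comes out on top because it collects the most votes. "baking_blog" ranks second, borrowing importance from the page that links to it.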

1999: The term Wi-Fi became part of the computing vocabulary, and users began connecting to the Internet without wires.

2004: Facebook, a social networking site, launched. Originally called "The Facebook," the platform emulated the handy book upperclassmen were handed each year that introduced them to the incoming freshmen, with a photo, high school, hometown, and perhaps some other information about each member of the new crop of students: a "facebook." When it first launched, only Harvard students could use it. Later, other Ivy League schools qualified, and bit by bit additional users were added, but in such a way as to make each new group feel hand-selected to take part in the Country Club's By Invitation Only Tournament. Good marketing. It is now used by almost 2.8 billion people worldwide, and not just once in a while, but many times daily. Facebook became the best known of many social media platforms, a field that now includes Twitter, LinkedIn, Instagram, Snapchat, TikTok, Pinterest, Reddit, YouTube, and WhatsApp. Which ones make the top ten keeps changing: TikTok seems to be one-upping Instagram in creating "influencers" and "models," while new chat and text platforms join the mix almost daily.

2005: YouTube, a video sharing service, was founded.

2006: Apple introduced the MacBook Pro, its first Intel-based, dual-core mobile computer, as well as an Intel-based iMac. Nintendo’s Wii game console hit the market. These devices were moving the needle on hardware significantly.

2007: The iPhone brought many computer functions to the smartphone. This was another game-changing moment. Smartphones existed before the iPhone, but the iPhone utterly changed human behavior, first mostly in developed nations and eventually worldwide.

So, what is the Next Big Thing? This is anyone's guess, but here are my four picks:

First: IoT – Internet of Things, which is more or less already here. It refers to a system of interrelated, internet-connected objects that are able to collect and transfer data over a wireless network without human intervention.

Examples: Connected appliances. Smart home security systems. Autonomous farming equipment. Wearable health monitors. Smart factory equipment. Wireless inventory trackers. Ultra-high-speed wireless internet. Biometric cybersecurity scanners.

Maybe you remember the movie Minority Report, in which a person walking down the street has their eyes scanned, and then an ad tailored just for them appears on the walls they pass. Well, guess what: a company called Eyelock has created an iris-based identity authentication technology, offering products that serve the automotive, financial, mobile, and healthcare sectors.

Second: AI – or Artificial Intelligence.

Have you seen the robots that can now move smoothly like humans? Did you know that in Japan you can buy a robotic infant, and that many women, especially of grandmother age, keep one rather than caring for a real baby? You can also buy a robotic or virtual boyfriend or girlfriend. Enough said. Health monitoring, early warnings, and predicting a person's condition are all applications of AI.

Third: CRISPR, a gene-editing technology. It uses a short guide sequence of RNA to steer an enzyme (such as Cas9) to a precise spot in an organism's DNA, where the genetic code can be cut and altered to enhance or change the organism. This one has a big downside possibility.

Finally, Blockchain: We covered this powerful distributed ledger in a previous article, so I’ll leave that one here, only to say that if you can create currency, you have created a disruptive technology of monumental proportions.

And I'll leave you to ponder the wonders that have yet to be shown to us, but are no doubt percolating in the minds of brilliant inventors somewhere in the world.